Take Your Learning to the Next Level! See More Content Like This On The New Version Of Perxeive.

Get Early Access And Exclusive Updates: Join the Waitlist Now!

Since the release of ChatGPT in November 2022, public awareness of large language models (LLMs) has exploded, and many more people are actively looking for useful ways to leverage the power of these models. As the models have grown in size (measured by their number of parameters), they have shown ever greater prowess in processing and generating natural language. However, their capabilities are often limited to providing information rather than solving more complex real-world challenges. To address this limitation, Shunyu Yao et al. proposed the ReAct (Reasoning and Acting) framework for prompting LLMs [1]. This approach combines reasoning with the ability to take actions, such as calling external APIs, enabling LLMs to perform tasks on behalf of users and significantly enhancing their utility.

Generative AI has surged in popularity since OpenAI released ChatGPT, because the chat interface made the technology accessible to non-technical users. Since then, a number of alternative LLM-based products have been released, including Microsoft Copilot (originally Bing AI), Anthropic's Claude and Google Bard; earlier still, Character AI and Perplexity AI had launched. The technology is becoming more broad-based, capable and accessible to both consumers and businesses.

As an early adopter of generative AI, I had to learn how to use the OpenAI GPT-3 API at a time when few resources were available and few people were paying attention to the development of LLMs. Developing Perxeive, an app that used the GPT-3 API to generate essays and was distributed through the Apple App Store, required a huge amount of learning by trial and error. My key lesson from that time was how sensitive the models were to the exact characters used in the prompt; all kinds of techniques were needed to get consistent, high-quality responses. Ultimately, only by fine-tuning the models for a specific task could you confidently release a product to market. I previously posted five lessons learned implementing AI models using the OpenAI GPT-3 API, briefly summarising my experience of taking a product to market with the early versions of the API back in November 2021.

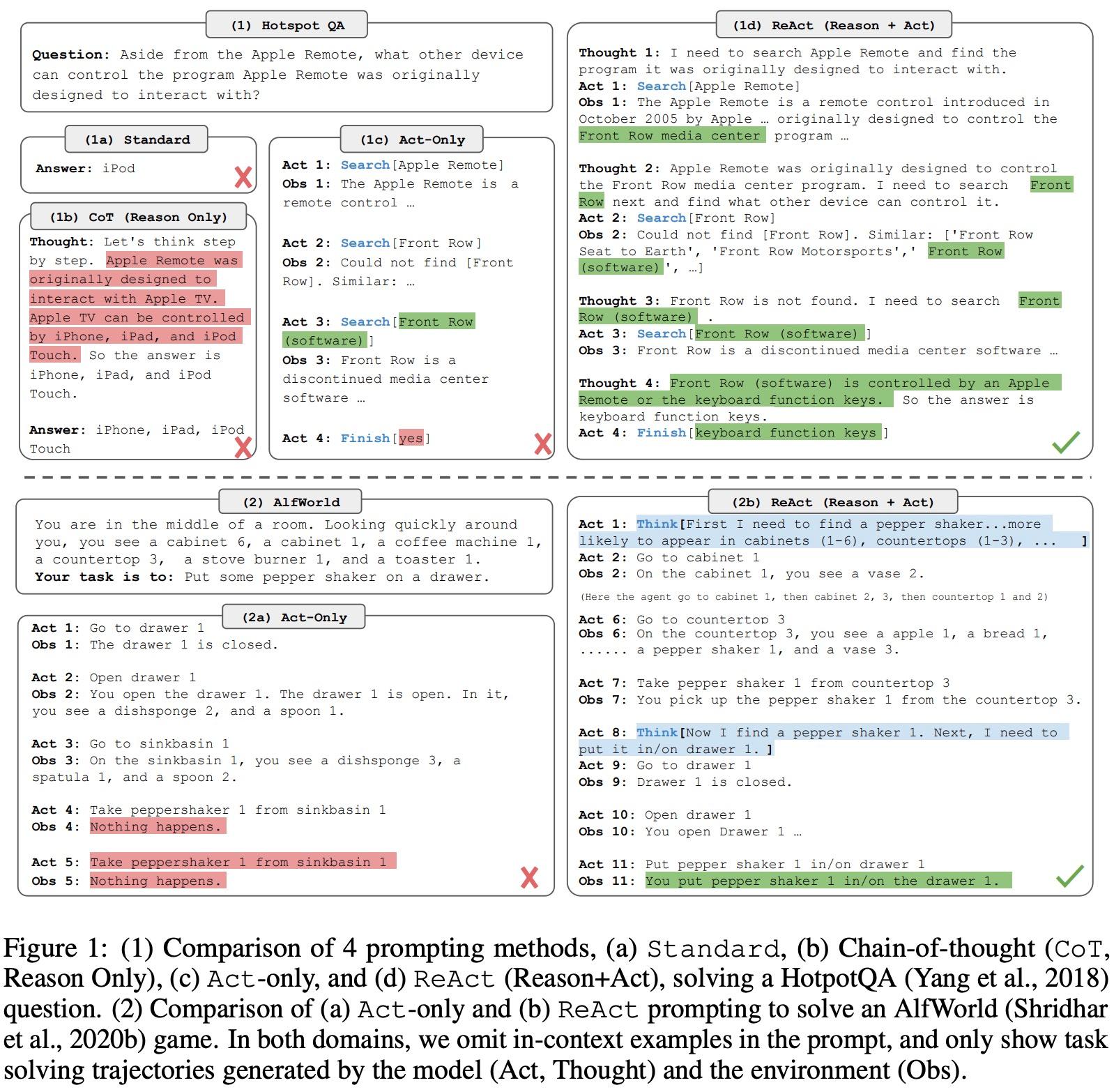

More recently I wrote a short post on Chain-of-Thought prompting, which represented a potential quantum leap forward in the practical utility of LLMs. The ReAct framework builds on the techniques used in Chain-of-Thought prompting to significantly expand the potential use cases for LLMs.

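The ReAct framework consists of two main components:

- Reasoning: the model writes out free-form "thoughts" (reasoning traces) that break the task down, track progress and decide what to do next.
- Acting: the model issues actions, such as a search query or an API call, whose results (observations) are fed back into the prompt to ground the next step.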

The ReAct framework operates in a loop, which allows for more complex and nuanced interactions between the LLM and the environment in which it operates. The end-to-end process can be described as follows:

1. The LLM reasons about the user's input and everything it has observed so far.
2. It decides on an action to take.
3. The action is executed, for example through an API call.
4. The LLM reflects on the result of the action (the observation), updates its understanding of the conversation, and either repeats the loop or provides its answer.
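
To make the loop concrete, here is a minimal Python sketch under several assumptions: the model is treated as a plain text-in, text-out function (a scripted stand-in here, not a real LLM API), each model step ends with a line of the form `Action: name[argument]` (loosely following the `Search[...]`/`Finish[...]` notation in the paper's examples), and `finish` is a reserved action that returns the answer. `react_loop`, `scripted_llm` and the `search` tool are hypothetical names chosen for illustration.

```python
import re
from typing import Callable, Dict, List

# Matches an action line such as "Action: search[ReAct]" (assumed syntax).
ACTION_RE = re.compile(r"^Action:\s*(\w+)\[(.*)\]\s*$", re.MULTILINE)


def react_loop(
    question: str,
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> str:
    """Minimal Thought -> Action -> Observation loop; stops on finish[...] or max_steps."""
    prompt = f"Question: {question}\n"
    for _ in range(max_steps):
        # Steps 1-2: the model writes a thought and proposes one action.
        step = llm(prompt)
        prompt += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"No action found in model output: {step!r}")
        name, arg = match.group(1), match.group(2)
        if name == "finish":
            return arg  # the model has decided it can answer
        # Step 3: execute the action with the matching tool, if one exists.
        tool = tools.get(name)
        observation = tool(arg) if tool else f"Unknown action: {name}"
        # Step 4: feed the result back so the next thought can build on it.
        prompt += f"Observation: {observation}\n"
    return "No answer within the step limit."


def scripted_llm(outputs: List[str]) -> Callable[[str], str]:
    """Hypothetical stand-in for a real model: returns canned steps in order."""
    steps = iter(outputs)
    return lambda prompt: next(steps)


if __name__ == "__main__":
    # Hypothetical tool: a tiny in-memory lookup instead of a real search API.
    facts = {"ReAct": "ReAct interleaves reasoning traces with actions."}
    llm = scripted_llm([
        "Thought: I should look up ReAct.\nAction: search[ReAct]",
        "Thought: That answers it.\nAction: finish[It interleaves reasoning and acting.]",
    ])
    answer = react_loop("What does ReAct do?", llm, {"search": lambda q: facts.get(q, "No results.")})
    print(answer)  # It interleaves reasoning and acting.
```

In a real system `llm` would wrap a call to an actual model, and generation would typically be stopped at the `Observation:` marker so that the model cannot invent its own observations; the `max_steps` cap guards against a model that never chooses to finish.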

The following figure taken from the paper provides an example of ReAct:

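The ReAct framework is designed to be general-purpose and can be applied to a variety of tasks; the paper evaluates it on question answering, fact verification, and decision-making in interactive environments such as a text-based household game and a simulated web shop. Because the reasoning steps are written out explicitly alongside the actions, the model's behaviour becomes more transparent, interpretable and adaptable to different scenarios.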
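
One way to see why the framework is general-purpose: the Thought/Action/Observation format stays fixed, and only the set of available actions changes from task to task. The minimal sketch that follows, assuming a plain-text prompt and a `name[argument]` action syntax, builds the same instruction block for two different action spaces. `build_react_prompt`, `QA_ACTIONS` and `HOUSEHOLD_ACTIONS` are hypothetical; the action names are loosely modeled on the question-answering and household tasks in the paper, not copied from its prompts.

```python
from typing import Dict

# Hypothetical action spaces for two different tasks; only this table changes.
QA_ACTIONS = {
    "search": "look up an entity and return the first paragraph of its page",
    "lookup": "return the next sentence containing a keyword",
    "finish": "return the final answer",
}
HOUSEHOLD_ACTIONS = {
    "goto": "move to a location, e.g. goto[countertop 1]",
    "take": "pick up an object, e.g. take[mug 1]",
    "finish": "declare the task complete",
}


def build_react_prompt(task: str, actions: Dict[str, str]) -> str:
    """Assemble a task-agnostic ReAct instruction block for a given action space."""
    lines = [
        "Solve the task by interleaving Thought, Action and Observation steps.",
        "Thought: reason about the current situation.",
        "Action: one of the following, written as name[argument]:",
    ]
    # List each available action with a short description.
    lines += [f"  {name}[...]: {desc}" for name, desc in actions.items()]
    lines.append(f"Task: {task}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_react_prompt("Who directed the film Alien?", QA_ACTIONS))
    print()
    print(build_react_prompt("Put a clean mug on the shelf.", HOUSEHOLD_ACTIONS))
```

Keeping the action space as data rather than hard-coding it into the prompt means a new task only needs a new table of tools, while the loop that parses and executes actions can stay the same.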

One key insight of the ReAct paper is that integrating reasoning and acting capabilities can help language models better understand the context and intent of user inputs. By explicitly modeling the environment and allowing the model to take actions, the authors demonstrate that the model can request additional information when it needs it (for example, by searching Wikipedia) and adapt its behaviour based on the current state of the task.

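The authors of the ReAct paper highlight several unique features of the ReAct framework:

- Intuitive and easy to design: writing ReAct prompts is straightforward, as human annotators simply write down their thoughts alongside the actions taken.
- General and flexible: the free-form thought space and the thought-action format work for diverse tasks with different action spaces and reasoning needs.
- Performant and robust: ReAct generalises well to new task instances while learning from only a handful of in-context examples.
- Human aligned and controllable: the reasoning process is interpretable, so humans can inspect it and even correct the agent's behaviour by editing its thoughts.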

One of the most exciting features of the ReAct framework is the ability to take actions such as database queries or web searches. This opens up the possibility of obtaining live data on which the LLM can then operate. A key limitation of LLMs is that their knowledge is a snapshot in time, fixed when they were trained, and therefore goes out of date. ReAct offers a way around this: in a multi-step prompt, the latest data can be retrieved by an action and incorporated into the prompt for the next step.
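
To illustrate how live data can flow into a subsequent prompt, here is a minimal Python sketch. It assumes an in-memory SQLite table standing in for a real, continuously updated database; the `stock` table, the `lookup_stock` tool and `extend_prompt` are hypothetical names, and the `Action:`/`Observation:` lines are an assumed text format rather than a fixed API. The tool uses a parameterised query because its argument comes from model-generated text.

```python
import sqlite3
from typing import Callable, Dict

# Hypothetical "live" data source: an in-memory table that can change at any time,
# long after the model's training cut-off.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stock (item TEXT PRIMARY KEY, quantity INTEGER)")
conn.execute("INSERT INTO stock VALUES ('widget', 42)")


def lookup_stock(item: str) -> str:
    """Hypothetical tool: fetch the current quantity of one item with a parameterised query."""
    row = conn.execute("SELECT quantity FROM stock WHERE item = ?", (item,)).fetchone()
    return f"{item}: {row[0]} in stock" if row else f"{item}: not found"


def extend_prompt(history: str, action: str, arg: str,
                  tools: Dict[str, Callable[[str], str]]) -> str:
    """Execute one action and append its result as an Observation for the next model call."""
    observation = tools[action](arg)
    return f"{history}Action: {action}[{arg}]\nObservation: {observation}\n"


if __name__ == "__main__":
    history = "Question: How many widgets are in stock?\nThought: I need the current figure.\n"
    print(extend_prompt(history, "lookup_stock", "widget", {"lookup_stock": lookup_stock}))
```

Exposing a narrow, read-only tool like this, rather than letting the model write arbitrary SQL, is a deliberate design choice: it limits what a mistaken or manipulated action can do. Re-running the same action after the table changes returns the new value, which is precisely what a model's frozen training data cannot provide.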

The ReAct framework presents a promising new approach to language models that could have significant implications for natural language processing and artificial intelligence. By integrating reasoning and acting capabilities, language models can engage more effectively with their environment and solve complex tasks that require multiple steps and a deep understanding of context. This is another leap forward in how LLMs can be used for real-world challenges and opportunities. The scope of potential use cases is rapidly expanding, and businesses that successfully deploy LLMs could gain significant competitive advantages.

As the field continues to advance, we can expect innovative approaches like ReAct to drive further improvements in LLM performance and utility.

[1] Yao, Shunyu, et al. "ReAct: Synergizing Reasoning and Acting in Language Models." arXiv preprint arXiv:2210.03629 (2022).