Take Your Learning to the Next Level! See More Content Like This On The New Version Of Perxeive.

Get Early Access And Exclusive Updates: Join the Waitlist Now!

In the rapidly emerging field of generative artificial intelligence, large language models have made significant strides in understanding and generating human-like text. However, their ability to reason and solve complex problems has been somewhat limited. A new and highly useful technique called "Chain-of-Thought (CoT) prompting" has been proposed that opens up an exciting set of opportunities to apply generative AI to real-world challenges. The technique was introduced and explained in the research paper "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models" by Wei et al. This post provides a brief summary of the paper.

Generative AI has exploded in popularity since OpenAI released ChatGPT in November 2022, as its chat interface made the technology accessible to non-technical users. A number of alternative LLM-based products have followed, including Microsoft Copilot (originally Bing AI), Anthropic's Claude and Google Bard, with Character AI and Perplexity AI released before those. The technology is becoming more broad-based, capable and accessible to both consumers and businesses.

Having been an early adopter of generative AI, I had to learn prompt engineering at a time when few resources were available. The only meaningful learning resources were the tutorials in the OpenAI documentation and libraries of example prompts, which were very limited in scope. I previously posted five lessons learned from implementing AI models with the OpenAI GPT-3 API, briefly summarising my experience of taking a product to market using the early versions of the API back in November 2021. The key lesson at that time was how sensitive the models were to the exact characters used in the prompt; all kinds of techniques were required to get consistent, high-quality responses. Ultimately, only by fine-tuning the models for a specific task could you confidently release a product to market.

Prompt engineering is progressing rapidly, and Chain-of-Thought prompting offers the potential for a quantum leap forward in the practical utility of LLMs.

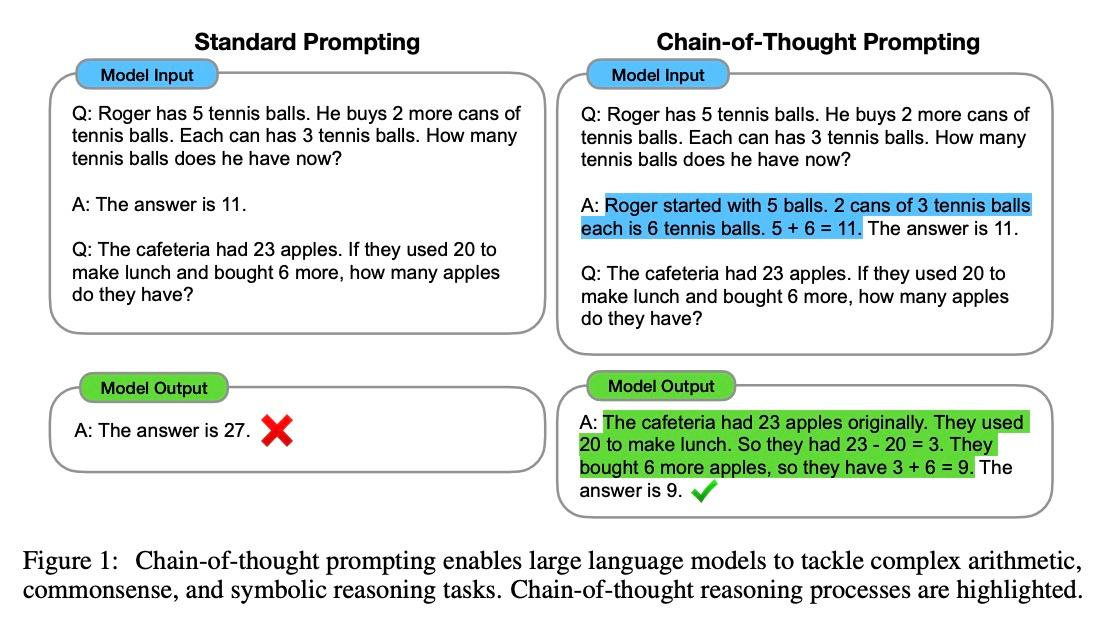

CoT prompting is a method that guides large language models to generate intermediate steps or "thoughts" before arriving at the final answer. This approach encourages the model to engage in multi-step reasoning, similar to how a human might solve a problem. The key benefit of this technique is that it helps the model produce more accurate and explainable answers, particularly for complex problems requiring multi-step reasoning or problem-solving skills.
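To make the difference concrete, here is a minimal Python sketch that assembles a few-shot prompt with and without a chain of thought. The single worked example is modelled on the tennis-ball exemplar used in the paper; the `Exemplar` class and `build_prompt` helper are hypothetical names for illustration, and sending the prompt to an actual model is left out because that depends on which API you use.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Exemplar:
    """One worked example shown to the model before the real question."""
    question: str
    reasoning: str  # the intermediate "thoughts"
    answer: str


def build_prompt(exemplars: List[Exemplar], question: str, chain_of_thought: bool) -> str:
    """Concatenate worked examples in Q/A format, then the new question."""
    parts = []
    for ex in exemplars:
        if chain_of_thought:
            # CoT prompting: the worked answer spells out its intermediate steps.
            parts.append(f"Q: {ex.question}\nA: {ex.reasoning} The answer is {ex.answer}.")
        else:
            # Standard prompting: the worked answer gives only the result.
            parts.append(f"Q: {ex.question}\nA: The answer is {ex.answer}.")
    # Leave the last answer open for the model to complete.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


EXEMPLARS = [
    Exemplar(
        question=("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
                  "Each can has 3 tennis balls. How many tennis balls does he have now?"),
        reasoning=("Roger started with 5 balls. 2 cans of 3 tennis balls each is "
                   "6 tennis balls. 5 + 6 = 11."),
        answer="11",
    )
]

QUESTION = ("The cafeteria had 23 apples. If they used 20 to make lunch and "
            "bought 6 more, how many apples do they have?")

if __name__ == "__main__":
    print(build_prompt(EXEMPLARS, QUESTION, chain_of_thought=False))
    print("-" * 40)
    print(build_prompt(EXEMPLARS, QUESTION, chain_of_thought=True))
```

The two prompts differ only in whether the worked answer shows its arithmetic. Given the CoT version, a sufficiently capable model tends to continue in the same style, writing out steps such as 23 - 20 = 3 and 3 + 6 = 9 before stating its final answer.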

The following figure is taken from the paper and provides an example of a chain-of-thought prompt compared to a typical prompt:

At its core, CoT prompting mimics the human thought process by breaking down complex problems into smaller, manageable steps. Here's a simplified summary of how it works:
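One practical consequence is that the model's output contains both the intermediate steps and the final answer, so the two can be separated and inspected. The sketch below assumes the completion ends with a sentence of the form "The answer is N.", mirroring the exemplar format in the paper. The sample completion, the regular expressions and the helper names are illustrative assumptions, and the arithmetic check only understands simple whole-number `a + b = c`, `a - b = c` and `a * b = c` claims.

```python
import operator
import re
from typing import List, Optional, Tuple

# Illustrative completion in the style of the paper's worked example
# (an assumption, not captured model output).
COMPLETION = (
    "The cafeteria had 23 apples originally. They used 20 to make lunch. "
    "So they had 23 - 20 = 3. They bought 6 more apples, so they have 3 + 6 = 9. "
    "The answer is 9."
)

# Assumed answer format: "The answer is <number>".
ANSWER_RE = re.compile(r"The answer is\s+(-?\d+(?:\.\d+)?)")
# Simple whole-number arithmetic claims such as "23 - 20 = 3".
STEP_RE = re.compile(r"(\d+)\s*([-+*])\s*(\d+)\s*=\s*(\d+)")
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def split_chain_of_thought(completion: str) -> Tuple[List[str], Optional[str]]:
    """Split a completion into reasoning steps (one per sentence) and the final answer."""
    match = ANSWER_RE.search(completion)
    answer = match.group(1) if match else None
    reasoning = completion[: match.start()] if match else completion
    # Split after each full stop that is followed by whitespace.
    steps = [s.strip() for s in re.split(r"(?<=\.)\s+", reasoning) if s.strip()]
    return steps, answer


def wrong_steps(steps: List[str]) -> List[str]:
    """Return the steps containing an arithmetic claim that does not hold."""
    bad = []
    for step in steps:
        for a, op, b, c in STEP_RE.findall(step):
            if OPS[op](int(a), int(b)) != int(c):
                bad.append(step)
                break  # report each faulty step once
    return bad


if __name__ == "__main__":
    steps, answer = split_chain_of_thought(COMPLETION)
    for i, step in enumerate(steps, start=1):
        print(f"step {i}: {step}")
    print("final answer:", answer)
    print("faulty steps:", wrong_steps(steps))
```

Being able to audit each intermediate step in this way is part of what makes a CoT answer more explainable than a bare final answer.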

To evaluate the effectiveness of CoT prompting, the paper's authors conducted experiments using various language models, including GPT-3, PaLM and Codex. They compared CoT prompting with standard (direct) prompting and found that it significantly improved the models' performance, particularly for the largest models, on a range of reasoning tasks, such as:

While CoT prompting offers promising results, it is not without its challenges. Some potential limitations include:

Future research could focus on investigating methods to automatically generate CoT prompts and improving models' ability to handle more complex reasoning tasks.

CoT prompting is a significant step towards enabling large language models to perform multi-step reasoning, providing valuable insights into how these models can be guided to think through and solve problems more like humans. By harnessing CoT prompting, executive recruiters and other professionals can unlock new possibilities in using AI for complex problem-solving tasks, ultimately driving innovation and growth in their organizations.

About The Author

I build businesses, both as independent startups and as new initiatives within large global companies. As an early adopter of generative AI and a member of the beta program for OpenAI's GPT-3 model, I released my first LLM-based product, Perxeive, to the Apple App Store in November 2021. Since then, I have continued to develop my experience and skills in applying generative AI to real-world challenges and opportunities. If you are looking to build a business and require leadership, please contact me via the About section of this website.